Lalit Ahluwalia is committed to redefining the future of cybersecurity by helping businesses of all sizes build digital trust. Here, Lalit discusses the hype around generative AI, explains the cybersecurity risks it poses to the average user, and shows how to manage them with responsible AI.

You’ve likely heard about the recent generative AI hype. Tools like DALL-E 2, ChatGPT, and others can generate remarkably human-like text, images, and more with just a text prompt. While the creative potential is exciting, these powerful systems also introduce new cyber risks that you should be aware of.

Generative AI systems are advancing rapidly, thanks to large language models and deep learning techniques. However, in the wrong hands, these systems could be used for malicious purposes such as running disinformation campaigns at scale, impersonating people online, and automating cyberattacks.

A Question of Trust

Recent reports show that generative AI poses several security risks that erode digital trust. For instance, Anthropic’s popular AI chatbot, Claude, was found to fabricate convincing but false answers to questions. Though not malicious, this behavior shows how such systems could deceive people if misused. Similarly, AI image generators are being used to create non-consensual explicit images of celebrities and political deepfakes.

According to CBS News, AI-generated nude images and deepfakes of pop star Taylor Swift shocked the Internet community in early 2024. The incident demonstrates how generative AI can be exploited for identity theft, fraud, and scams. Imagine the danger this kind of misuse poses to every child with a smartphone. Unsurprisingly, the episode has drawn wide attention across the security community.

More chilling are cases where flawed AI has caused serious real-world harm, including deaths. Consider autonomous vehicles. Self-driving cars, while promising, have been involved in serious and sometimes deadly accidents caused by perception failures. One widely reported example is the October 2023 incident in San Francisco, in which General Motors’ Cruise autonomous vehicle struck a jaywalking pedestrian and dragged her about 20 feet, worsening her injuries.

IoTNews reports that this incident is under investigation by the Department of Justice (DoJ) and the Securities and Exchange Commission (SEC). AI-powered recruiting tools have also been found to discriminate unfairly, as a recent NPR report revealed. These incidents show the serious real-world consequences when AI risks are not managed properly.

What does this mean for you as an AI user or business leader? As generative AI advances rapidly, cyber risks are escalating. Malicious actors could leverage these powerful technologies to automate cybercrime. Even with good intentions, careless training of AI systems can lead to harmful outcomes. That is why the cyber risks posed by AI must be actively managed.

Cyber Risk Management with Responsible AI

Responsible AI offers a proactive approach to governing AI and managing its risks. Closely associated with terms such as ethical AI, trustworthy AI, and AI safety, responsible AI aims to create AI systems that are lawful, ethical, robust, and socially beneficial.

Managing cyber risks is critical as AI becomes ubiquitous. Flawed or misused AI can directly endanger individuals and groups. It can also enable crimes like fraud, theft, and misinformation at scale. These risks expand as AI systems grow more capable and autonomous.

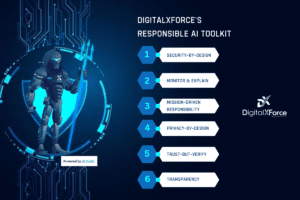

Responsible AI helps manage risks by addressing safety and security from the initial design stage. It provides frameworks to build AI that is technically robust, unbiased, transparent, privacy-respecting, and aligned with ethics and human values.

For example, robust AI requires extensive testing to ensure reliable performance in the real world. Developing transparent AI systems that can explain their reasoning also makes them more trustworthy. Designing AI with privacy, fairness, and ethics in mind helps reduce the risk of harmful outcomes and misuse.

Integrate responsible AI to manage cyber risks and build trust in your organization by adopting the following best practices:

- Perform rigorous cybersecurity testing to safeguard AI from data poisoning, model theft, and adversarial attacks.

- Implement explainable AI methods that allow humans to understand AI behavior.

- Monitor AI systems through their lifecycle to detect harm early and continuously improve safety.

- Democratize AI development by including diverse perspectives to reduce harmful bias.

- Adopt frameworks like Responsible AI Principles and Model Cards to align AI with human values.
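To make the lifecycle-monitoring practice more concrete, here is a minimal Python sketch. It assumes each model output has already been labeled as flagged or not by an existing content filter or human review step. The class name `FlaggedOutputMonitor`, the window size, and the threshold are hypothetical choices for illustration, not part of any specific product or framework. The class tracks the share of flagged outputs over a sliding window and signals when that share crosses the threshold.

```python
from collections import deque


class FlaggedOutputMonitor:
    """Hypothetical monitor: tracks the share of flagged model outputs
    over a sliding window and signals when it exceeds a threshold."""

    def __init__(self, window_size: int = 100, threshold: float = 0.05) -> None:
        if window_size <= 0:
            raise ValueError("window_size must be positive")
        # deque with maxlen automatically drops the oldest result when full
        self.window: deque[bool] = deque(maxlen=window_size)
        self.threshold = threshold

    def flagged_rate(self) -> float:
        """Fraction of outputs in the current window that were flagged."""
        if not self.window:
            return 0.0
        return sum(self.window) / len(self.window)

    def record(self, flagged: bool) -> bool:
        """Record one review result; return True if the alert condition is met."""
        self.window.append(flagged)
        return self.flagged_rate() > self.threshold


if __name__ == "__main__":
    # Simulated review results: mostly clean outputs, then a burst of flagged ones.
    monitor = FlaggedOutputMonitor(window_size=10, threshold=0.2)
    for i, flagged in enumerate([False] * 8 + [True] * 3):
        if monitor.record(flagged):
            print(f"Alert at output {i}: flagged rate {monitor.flagged_rate():.0%}")
```

A sliding window keeps the check focused on recent behavior, so a sudden rise in harmful outputs is not diluted by a long history of clean ones. In practice, an alert like this would typically trigger human review rather than an automatic fix.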

Responsible AI in Action

Industry leaders are already adopting responsible development practices. Microsoft requires its engineering teams to conduct responsible AI reviews covering fairness, security, privacy, inclusiveness, transparency, accountability, and reliability.

IBM’s AI FactSheets document how its AI models were built and tested and what uses they are recommended for. Google recently open-sourced a toolkit called Responsible AI for Health to help develop safer medical AI.
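The kind of documentation that AI FactSheets and model cards capture can be sketched as a simple structured record. The Python example below is hypothetical: the `ModelFactSheet` class and its field names are our own simplification, not IBM's FactSheets schema or any official model card format. It records how a model was built, how it was evaluated, and what it should and should not be used for, and it lists any sections left empty so gaps can be caught before deployment.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelFactSheet:
    """Hypothetical, simplified documentation record for an AI model."""

    model_name: str
    version: str
    training_data: str  # short description of data sources
    evaluation: dict[str, float] = field(default_factory=dict)  # metric name -> score
    intended_uses: list[str] = field(default_factory=list)
    out_of_scope_uses: list[str] = field(default_factory=list)
    known_limitations: list[str] = field(default_factory=list)

    def missing_sections(self) -> list[str]:
        """Return the names of sections that are still empty."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialize the record so it can be stored alongside the model."""
        return json.dumps(asdict(self), indent=2)


if __name__ == "__main__":
    sheet = ModelFactSheet(
        model_name="support-ticket-classifier",  # hypothetical model
        version="1.0",
        training_data="Anonymized internal support tickets (hypothetical)",
        evaluation={"accuracy": 0.91},  # placeholder value for illustration only
        intended_uses=["Routing customer support tickets"],
    )
    print("Missing sections:", sheet.missing_sections())
    print(sheet.to_json())
```

Keeping such records as data rather than free text makes it straightforward to require complete documentation as a gate in a review or release process.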

These examples show how responsible AI can be integrated into business workflows to manage risks. With cyber threats on the rise, it is prudent for organizations to incorporate responsible AI into their risk management and cybersecurity strategy. Resources like the Ethical OS Toolkit, OECD AI Principles, and Azure Responsible AI can help you get started.

The Way Forward

As AI capabilities grow rapidly, managing the resulting cyber risks is crucial. Irresponsible use of generative AI can endanger people and enable cybercrime. Responsible AI offers principles and practices to develop AI safely, ethically, and securely.

By making responsible AI part of your cybersecurity approach, you can harness AI’s benefits while managing its risks. The time is right for organizations to future-proof themselves with responsible AI. With vigilance and care, we can cultivate AI that works for the benefit of humanity.